Robbing Peter

Inside the AI labour layer everyone pretends doesn’t exist.

Hey, Sheriff here 👋

I have a question for you. How many times a week do you use ChatGPT?

And how often do you wonder how it was built, and who helped build it? If the answer is “not often”, don’t worry.

This week, we’re diving into the workforce that helps train the world’s smartest chatbots.

But first, an announcement…

Latitude59 is coming to Kenya! 🇰🇪

Latitude59 is bringing Africa's Boldest Innovation Conversation to the ASK Dome in Nairobi, Kenya, on December 4th-5th.

Join us for two days of honest connections with founders and investors, hands-on masterclasses, and a high-stakes pitch competition featuring the top 10 startups from across the continent.

You can secure your spot today using code TECHSAFARIxL59 for a 35% discount!

Now, on to this week’s story…

The world’s smartest AI is powered by people you’ll never meet.

GPT-5 might know 200 languages, write essays in seconds, and even pass the bar exam.

But the people who taught it right from wrong?

A lot of them live in Nairobi, Lagos, Kigali, and Kampala, earning $1 to $3 an hour.

Behind the polished AI demos is a hidden workforce working brutal hours for pocket change.

Most of these people aren’t researchers, engineers, or PhDs.

They’re ordinary people doing invisible labour to make sure AI doesn’t go rogue.

Without them, AI wouldn’t work.

But to understand why, we need to explain…

The Supply Chain of AI

The official AI supply chain has four layers.

The first is Compute.

This is the hardware that makes the thinking possible.

It’s built on NVIDIA chips, GPU clusters, and data centres.

Here’s an NVIDIA H100 GPU - used in training most of the AI models you use

The second is Data.

This is the chaotic scrape of the public internet. Everything from text to audio, video, and images lives on this block.

It’s similar to the vast amounts of information humans consume about the world to make sense of it.

The third layer is Model Training.

This layer is where the brain pathways (aka “Models”) that help AI to think are built.

The main players here are the big labs building foundational models, like OpenAI, Anthropic, and DeepMind.

While DeepMind, Claude, and GPT-4 dominate the headlines, there are over 2 million AI models available online. Most are small and used for specific tasks.

The fourth layer is Applications.

These are the AI-powered apps you interact with every day, from chatbots to copilots and AI agents.

These four layers get celebrated on stage at developer conferences.

They’re clean, marketable, and make for a good headline.

But they’re also incomplete.

Because between “Data” and “Model Training” sits a layer that makes everything else possible: Human Judgement.

See, no AI model is safe or useful until a human teaches it how to behave.

A GPU cannot teach a model morality, and a dataset cannot teach it empathy.

For these, you need humans.

The frontier labs, like OpenAI and Anthropic, rely on millions of micro-decisions made by human beings.

Judgments, corrections, labels, and explanations, all done in a bid to make AI safer.

This technique is called Reinforcement Learning from Human Feedback (RLHF).

It’s built on an entire labour force the size of a small country.

And without it, AI collapses into chaos.

The dark desk jobs

AI learns through two main methods: next-token prediction and reinforcement learning.

They’re often used together to train AI.

And here’s how that works:

1. A piece of software takes in lots of data.

2. It looks for patterns in the data: structure, relationships, sequence, and so on.

3. It makes a prediction and compares it against real-world data.

4. If it’s right, it rewards itself with a score (like dopamine in humans). If it’s wrong, it adjusts its approach and tries again.

So, when you ask AI a question, it’s not really thinking. It’s simply predicting what “should” come next, as correctly as it can.
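For the curious, here’s a toy sketch of what “predicting what comes next” means. It’s a deliberate simplification: it just counts which word follows which in a tiny made-up sentence, whereas real models use neural networks trained on vastly more data. The function names and the sample sentence are ours, purely for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    tokens = text.split()
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, token):
    """Return the most frequently seen follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training text, chosen only to keep the example small.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)

print(predict_next(model, "the"))    # "cat" follows "the" twice, "mat" once
print(predict_next(model, "slept"))  # nothing ever follows "slept" here
```

Notice that the program has no idea what a cat is. It only knows that “cat” usually follows “the” in the text it has seen, which is the point the paragraph above is making.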

But correctness is not the same as being appropriate.

And while we think the resulting models are “intelligent”, they’re just really good at guessing the most likely combination of words for any situation.

To close the gap between likely and appropriate, thousands of humans have to label, filter, rank, rewrite, or correct AI output during training, much like teaching a child not to swear.

These people do at least four categories of tasks:

1. Content Moderation: Filtering out violence, sexual content, extremist propaganda, and child exploitation imagery.

2. Data Labelling: Tagging images, categorising text, annotating conversations, classifying emotions, and identifying objects.

3. RLHF (Reinforcement Learning from Human Feedback): The most valuable work in modern LLM training. Workers judge which responses are helpful, harmful, safe, or nonsensical, teaching models how to “sound human” and stay within moral boundaries.

4. Synthetic Dialogue Creation: Workers write thousands of sample conversations AI can learn from, making them the uncredited ghostwriters behind every chatbot.

These people make data good enough for AI to learn and safe enough for people to use.
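To make the RLHF step concrete, here’s a minimal sketch of how a pile of human “A is better than B” judgments can be turned into scores. It’s a stand-in, not how any lab actually does it: real pipelines train a separate reward model on these comparisons and then use it to adjust the chatbot. The response labels and function name below are hypothetical.

```python
from collections import defaultdict

def win_rates(comparisons):
    """Turn (chosen, rejected) pairs into a win rate for each response."""
    wins = defaultdict(int)
    appearances = defaultdict(int)
    for chosen, rejected in comparisons:
        wins[chosen] += 1
        appearances[chosen] += 1
        appearances[rejected] += 1
    return {response: wins[response] / appearances[response]
            for response in appearances}

# Each tuple is one worker's judgment: (response they preferred, response they rejected).
comparisons = [
    ("polite_refusal", "harmful_instructions"),
    ("polite_refusal", "nonsense"),
    ("nonsense", "harmful_instructions"),
]

scores = win_rates(comparisons)
best = max(scores, key=scores.get)
print(scores)
print(best)
```

Every tuple in that list is a decision a person had to make, often after reading something they’d rather not have read. Scale it up to millions of tuples and you have the labour layer this story is about.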

The problem is…a lot of this ghost work isn’t merely tagging pictures of dogs.

It’s looking at humanity’s worst impulses, repeatedly, every day.

Things like murder, bestiality, and child rape.

Some workers describe panic attacks, insomnia, depression, emotional blunting, and PTSD-like symptoms from looking at harmful content on a constant loop.

Others quit after a month because these images haunt them.

The worst part?

Many can’t seek therapy due to non-disclosure agreements (NDAs), so they’re forced to carry the psychological load alone.

Over the last decade, Africa quietly became the backbone of this global AI “dark labour” market.

Kenya became a hub for moderation.

Nigeria became an annotation hub.

Ghana, Uganda, Rwanda, and South Africa followed.

Why? Because Africa has:

- An English-speaking workforce

- A younger population with digital skills

- Lower labour costs

- Time-zone alignment with Europe

- A growing outsourcing ecosystem

- Minimal labour protections

Outsourcing firms don’t say it directly, but the business logic is simple: Africa provides skilled digital labour at the lowest price the global market can legally get away with.

On the ground, though, this “digital work” is much closer to a sweatshop.

Carta's Build & Brew is coming to Cape Town 🇿🇦

Hey hey! 👋🏾

We're partnering with Carta to bring their flagship Build & Brew breakfast event to Cape Town on Friday, December 5th, marking Carta's first event on the African continent!

This is a morning gathering designed specifically for early-stage founders in South Africa's tech ecosystem.

Join us for a morning of:

🤝 Ecosystem connections: meet fellow founders, investors, and builders.

🚀 Startup tools demo: get hands-on with Carta Launch (free cap table platform).

☕️ Breakfast + Great Coffee on us.

📍 Venue details shared on registration.

Registration is approval-based and spots are limited to early-stage founders.

Teaching Skynet empathy for peanuts

African workers training billion-dollar models make less money per hour than it costs to buy a bottle of water in some cities.

In Kenya, Sama, an OpenAI contractor, pays $1.32 to $2 an hour.

In Nigeria, local annotation shops pay between $1 and $3 per hour.

In Uganda and Rwanda, the hourly wage can reach up to $4.

On microwork platforms, where work is task-based, completing a task pays as little as 10 cents.

But if we compare that to global pay for the same tasks, the disparity is huge.

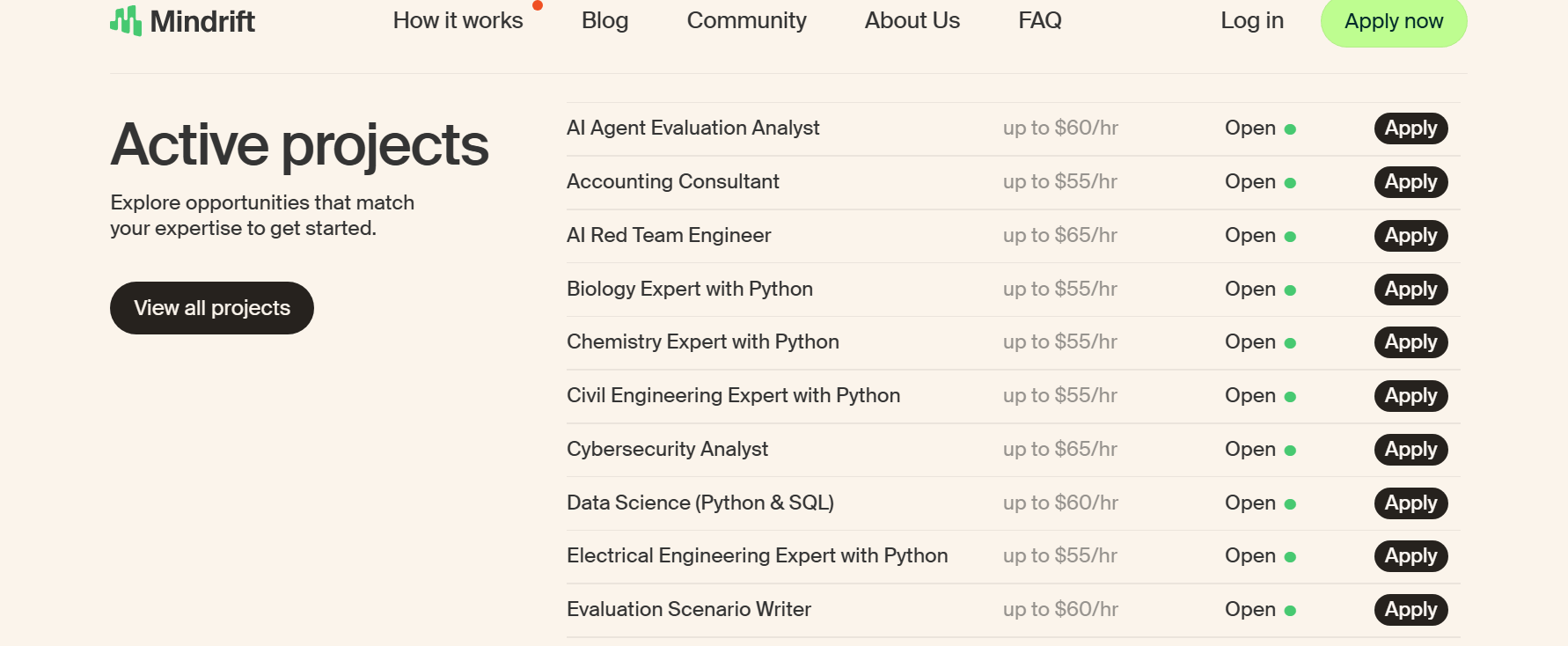

Companies like Mercor, which hire workers in the U.S., Canada, and Europe, pay:

- $16–$25/hour for basic annotation.

- $40–$75/hour for RLHF evaluation.

- $60–$120/hour for expert contractors (law, science, medicine).

“AI alignment specialist” roles on U.S. job boards frequently list:

- $20–$45/hour for entry-level roles.

- $75k–$150k salaries for full-time evaluators.

Here are some openings on Mindrift, an AI-training outsourcing platform

Many of these roles have the same task complexity and the same impact on the models.

But the pay is wildly different. Not because of skill, but because of location.
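To put a number on that gap, here’s a quick back-of-the-envelope calculation using only the hourly figures quoted above. It assumes, as this story does, that the work is comparable; the function name is ours.

```python
def pay_gap(local_low, local_high, remote_low, remote_high):
    """Return the smallest and largest possible pay multiples between two hourly ranges."""
    smallest = remote_low / local_high   # best-paid local vs worst-paid remote
    largest = remote_high / local_low    # worst-paid local vs best-paid remote
    return smallest, largest

# Figures quoted above: $1.32-$2/hour in Kenya vs $40-$75/hour for RLHF evaluation.
smallest, largest = pay_gap(1.32, 2.00, 40.00, 75.00)
print(f"{smallest:.0f}x to {largest:.0f}x")  # 20x to 57x
```

Even at the kindest comparison, that’s a twentyfold difference.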

Even worse, they leave the workers with psychological burdens too heavy to bear.

While this happens, the AI companies built on the work they’ve done raise billions of dollars.

And the human cost never shows up in the decks.

If you’ve been looking for a name for all of this…

It’s the Southern Tax

There’s a popular story across the world about the global south being a source of cheap skills.

This story built the Chinese manufacturing economy.

It built the Indian IT services industry.

And it built a global BPO (business process outsourcing) industry.

Between these three, you could say trillions of dollars in GDP are riding on this story.

But only one side of that story gets told: the employer’s.

Behind the productivity gains and cost savings lie people who often get marginally better pay, but a much worse quality of life.

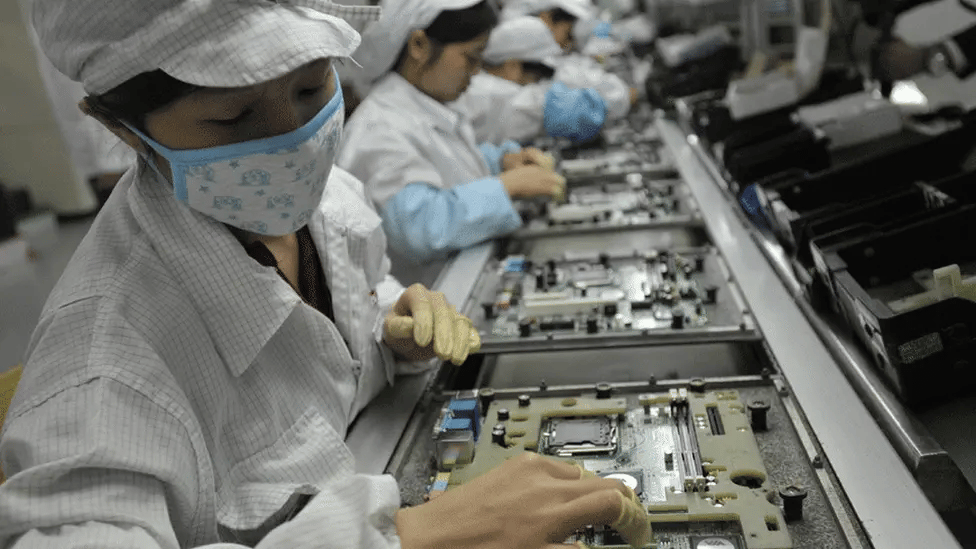

Foxconn, Apple’s biggest supplier in China, has documented cases of workers pulling 16-hour shifts during peak production.

You’ve heard a lot about iPhones being made in sweatshops, but Foxconn was ground zero for this

These workers often stand through entire shifts, repeating the same task thousands of times.

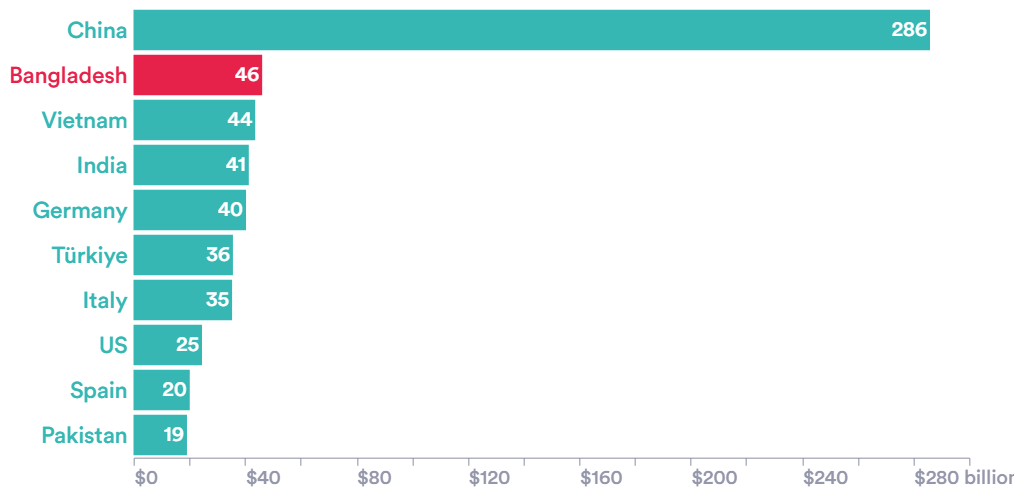

In Bangladesh, garment makers typically work 10 to 14 hours a day in unsafe buildings, making clothes for global brands.

Bangladesh is the second-largest textile exporter in the world, but that doesn’t translate into wealth for citizens working in the textile industry

They’re paid between $80 and $120 a month.

In 2013, a building called the Rana Plaza collapsed, killing 1,134 people, mostly garment makers for global brands.

And now, the same thing is playing out in AI.

Sama, a data annotation company, paid Kenyans a wage barely higher than the national minimum wage.

And they got trauma as a bonus.

In 2023, when Sama got outed for this, they laid off 200+ Kenyan workers, trying to save face.

The workers took Sama to court, and the blowback was messy.

Today, many AI data companies are trying to stay "ethical".

But for the most part, the wage gap remains.

So, while the global outsourcing trend has given a few countries in the global south a GDP boost, it’s not translating to a better life for people on the ground.

It’s one of the biggest inequalities of our time, and it needs to be solved, because…

This time is different

AI is unlike anything the world has ever seen.

Jeff Bezos, founder of Amazon, describes it as a “horizontal enabling layer”.

This means it’ll impact anything and everything.

Companies can do more with fewer people, in less time.

This means two things:

A lot of job cuts.

A lot of economic growth.

The World Economic Forum says AI could displace 92 million jobs by 2030, while creating 170 million new, unknown jobs.

At the same time, the International Data Corporation predicts that AI will add $20 trillion to the global economy by 2030.

Africa will be greatly impacted by both trends.

Nearly 10% of its young population is already unemployed.

And the Africans working on the biggest wealth creator of the century get paid peanuts.

If we keep paying the Southern Tax, there’ll come a time when we don’t just get the shorter end of the stick. We’ll get no stick at all.

How do you think Africa can stop paying the Southern Tax?

How We Can Help

Before you go, let’s see how we can help you grow.

Get your story told on Tech Safari - Share your latest product launch, a deep dive into your company story, or your thoughts on African tech with 60,000+ subscribers.

Create a bespoke event experience - From private roundtables to industry summits, we’ll design and execute events that bring the right people together around your goals.

Hire the top African tech Talent - We’ll help you hire the best operators on the continent. Find Out How.

Something Custom - Get tailored support from our Advisory team to expand across Africa.

That’s it for this week. See you on Sunday!

Cheers,

The Tech Safari Team

PS. refer five readers and you’ll get access to our private community. 👇🏾
